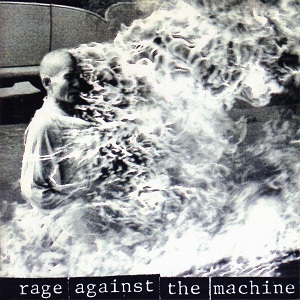

Data From Virtual Reality Headsets Can Give Corporations & Governments Unprecedented Insight Into & Power Over Our Emotions & Physical Behavior

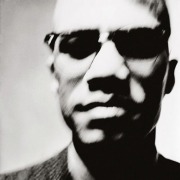

“WHY DO I look like Justin Timberlake?”

Facebook CEO Mark Zuckerberg was on stage wearing a virtual reality headset, feigning surprise at an expressive cartoon simulacrum that seemed to perfectly follow his every gesture.

The audience laughed. Zuckerberg was in the middle of what he described as the first live demo inside VR, manipulating his digital avatar to show off the new social features of the Rift headset from Facebook subsidiary Oculus. The venue was an Oculus developer conference convened earlier this fall in San Jose. Moments later, Zuckerberg and two Oculus employees were transported to his glass-enclosed office at Facebook, and then to his infamously sequestered home in Palo Alto. Using the Rift and its newly revealed Touch hand controllers, their avatars gestured and emoted in real time, waving to Zuckerberg’s Puli sheepdog, dynamically changing facial expressions to match their owners’ voices, and taking photos with a virtual selfie stick, to post on Facebook, of course.

The demo encapsulated Facebook’s utopian vision for social VR, first hinted at two years ago when the company acquired Oculus and its crowd-funded Rift headset for $2 billion. And just as in 2014, Zuckerberg confidently declared that VR would be “the next major computing platform,” changing the way we connect, work, and socialize.

“Avatars are going to form the foundation of your identity in VR,” said Oculus platform product manager Lauren Vegter after the demo. “This is the very first time that technology has made this level of presence possible.”

But as the tech industry continues to build VR’s social future, the very systems that enable immersive experiences are already establishing new forms of shockingly intimate surveillance. Once they are in place, researchers warn, the psychological aspects of digital embodiment, combined with the troves of data that consumer VR products can freely mine from our bodies (such as head movements and facial expressions), will give corporations and governments unprecedented insight into and power over our emotions and physical behavior.

VIRTUAL REALITY AS a medium is still in its infancy, but the kinds of behaviors it captures have long been a holy grail for marketers and data-monetizing companies like Facebook. With cookies, beacons, and other ubiquitous tracking code, online advertisers already record the habits of web surfers across a wide range of metrics, from which sites they visit to how long they spend scrolling, highlighting, or hovering over certain parts of a page. Data behemoths like Google also scan emails and private chats for any information that might help “personalize” a user’s web experience, most importantly by targeting the user with ads.

But those metrics are primitive compared to the rich portraits of physical user behavior that can be constructed from data harvested in immersive environments, with surveillance sensors and techniques that have already been controversially deployed in the real world.

“The information that current marketers can use in order to generate targeted advertising is limited to the input devices that we use: keyboard, mouse, touch screen,” says Michael Madary, a researcher at Johannes Gutenberg University who co-authored the first VR code of ethics with Thomas Metzinger earlier this year. “VR analytics offers a way to capture much more information about the interests and habits of users, information that may reveal a great deal more about what is going on in [their] minds.”

The value of collecting physiological and behavioral data is all too obvious for Silicon Valley firms like Facebook, whose data scientists in 2012 conducted an infamous study titled “Experimental evidence of massive-scale emotional contagion through social networks,” in which they secretly skewed users’ news feeds toward positive or negative content and thereby shifted the emotional tone of those users’ own posts. As one chief data scientist at an unnamed Silicon Valley company told Harvard business professor Shoshana Zuboff: “The goal of everything we do is to change people’s actual behavior at scale. … We can capture their behaviors, identify good and bad behaviors, and develop ways to reward the good and punish the bad.”